House Report on EU Censorship and Election Interference

Brussels bureaucrats are censoring Americans and interfering in our elections

Below is a brief overview of the executive summary of the Congressional report: “THE FOREIGN CENSORSHIP THREAT, PART II: EUROPE’S DECADE-LONG CAMPAIGN TO CENSOR THE GLOBAL INTERNET AND HOW IT HARMS AMERICAN SPEECH IN THE UNITED STATES.” This is from the Interim Staff Report of the Committee on the Judiciary of the U.S. House of Representatives.

The full 139-page congressional report was published by the U.S. House Judiciary Committee on February 3, 2026.

Here’s a brief overview of its contents:

Overview:

Title: “The Foreign Censorship Threat, Part II: Europe’s Decade-Long Campaign to Censor the Global Internet and How It Harms American Speech in the United States”

Main Findings:

This Congressional investigation exposes how European Union bureaucrats weaponized their Digital Services Act (DSA) to suppress free speech on American tech platforms, silencing Americans in their own country.

Key Points:

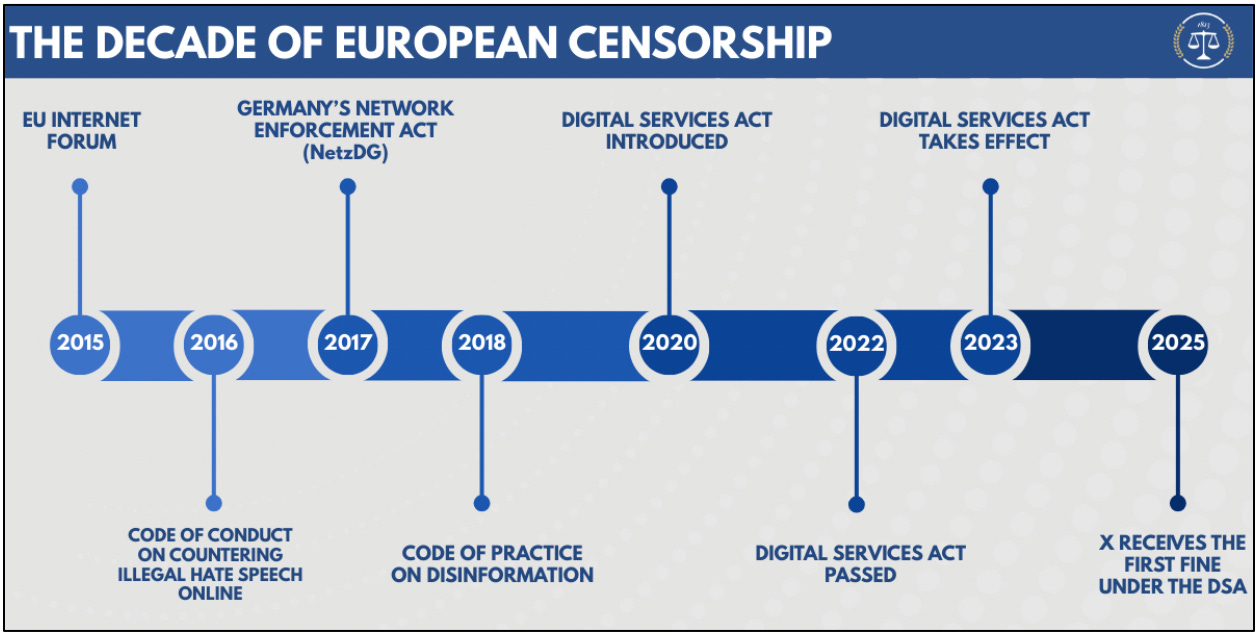

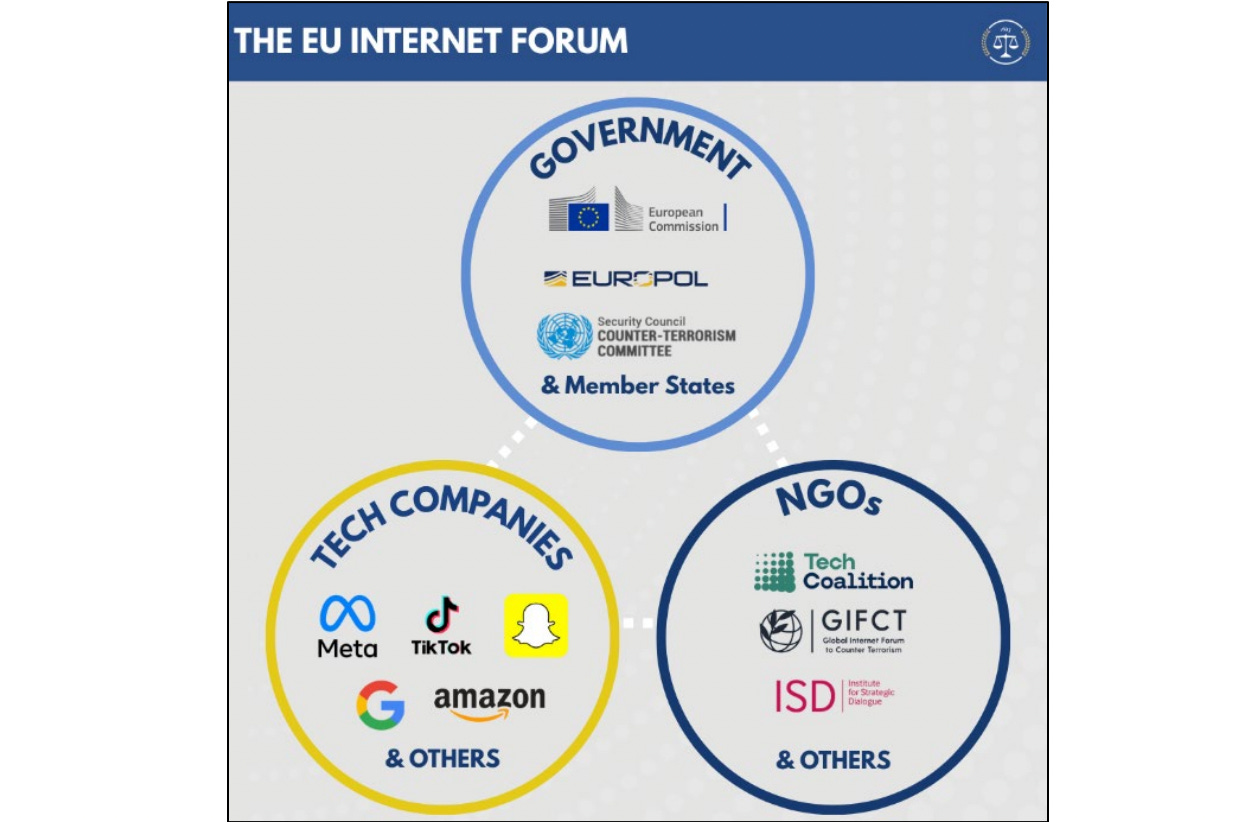

A Decade of Censorship: From 2015 forward, Brussels built a censorship apparatus through the EU Internet Forum, Hate Speech Code (2016), and Disinformation Code (2018), all laying groundwork for the DSA (2022).

Coercion Disguised as Compliance: The EU’s supposedly “voluntary” codes were anything but. Companies faced a stark choice: censor content or face crippling fines up to 6% of global revenue.

Targeting Conservative Speech: EU regulators systematically suppressed lawful political speech on COVID-19, vaccines, immigration, LGBTQ ideology, “populist rhetoric,” political satire, and “anti-government” viewpoints.

Censoring Americans: Because Big Tech uses global content policies, European censorship demands directly silence American citizens on U.S. soil—a brazen assault on our First Amendment rights.

Election Manipulation: The EU meddled in elections worldwide, including America’s 2024 presidential race, pressuring platforms to suppress conservative voices and manipulate electoral outcomes.

Smoking Gun Evidence: Subpoenas to ten tech giants uncovered thousands of documents proving this censorship conspiracy.

Bottom Line: This report proves Brussels bureaucrats are censoring Americans and interfering in our elections. Congress must act immediately to protect our constitutional rights from foreign censorship regimes.

Of interest, mainstream media almost completely ignored the report. European newspapers concentrated on the fact that specific bureaucrats were named as censoring and that the names were not redacted. The horror!

EXECUTIVE SUMMARY:

By the Committee on the Judiciary of the U.S. House of Representatives

February 3, 2026

The Committee on the Judiciary of the U.S. House of Representatives is investigating how and to what extent foreign laws, regulations, and judicial orders compel, coerce, or influence companies to censor speech in the United States. As part of this oversight, the Committee has issued document subpoenas to ten technology companies, requiring them to produce communications with foreign governments, including the European Commission and European Union (EU) Member States, regarding content moderation.

In July 2025, the Committee published a report detailing how the European Commission—the executive arm of the EU—weaponizes the Digital Services Act (DSA), a law regulating online speech, to impose global online censorship requirements on political speech, humor, and satire.

Since then, pursuant to subpoena, technology companies have produced to the Committee thousands of internal documents and communications with the European Commission. These documents show the extent—and success—of the European Commission’s global censorship campaign. The European Commission, in a comprehensive decade-long effort, has successfully pressured social media platforms to change their global content moderation rules, thereby directly infringing on Americans’ online speech in the United States. Though often framed as combating so-called “hate speech” or “disinformation,” the European Commission worked to censor true information and political speech about some of the most important policy debates in recent history—including the COVID-19 pandemic, mass migration, and transgender issues.

After ten years, the European Commission has established sufficient control of global online speech to comprehensively suppress narratives that threaten the European Commission’s power. Prior to the Committee’s subpoenas, these efforts largely occurred in secret. Now, the European Commission’s efforts have come to light for the first time, informing the Committee on legislative steps it can take to protect American free speech online.

The DSA is the culmination of a decade-long European effort to silence political opposition and suppress online narratives that criticize the political establishment.

The DSA took effect in 2023, and the European Commission issued the first-ever fine under the DSA in December 2025 against X. Although the DSA has been in effect for less than three years, the fine against X represents the culmination of a decade-long effort by the European Commission to control the global internet in order to suppress disfavored narratives online.

The internet and social media initially promised to be a force that would democratize speech, and with it, political power. This development threatened the established political order, and by the mid-2010s, the political establishments in the United States and Europe sought to counter rising populist movements that questioned deeply unpopular policies such as mass migration. Recognizing that tackling this problem would take several years, starting in 2015 and 2016, the European Commission began creating various forums in which European regulators could meet directly with technology platforms to discuss how and what content should be moderated. Though ostensibly meant to combat “misinformation” and “hate speech,” nonpublic documents produced to the Committee show that for the last ten years, the European Commission has directly pressured platforms to censor lawful, political speech in the European Union and abroad.

The EU Internet Forum (EUIF), founded in 2015 by the European Commission’s Directorate-General for Migration and Home Affairs (DG-Home), was among the first of these initiatives. By 2023, EUIF published a “handbook . . . for use by tech companies when moderating” lawful, non-violative speech such as:

“Populist rhetoric”

“Anti-government/anti-EU” content

“Anti-elite” content

“Political satire”

“Anti-migrants and Islamophobic content”

“Anti-refugee/immigrant sentiment”

“Anti-LGBTIQ . . . content” and

“Meme subculture.”

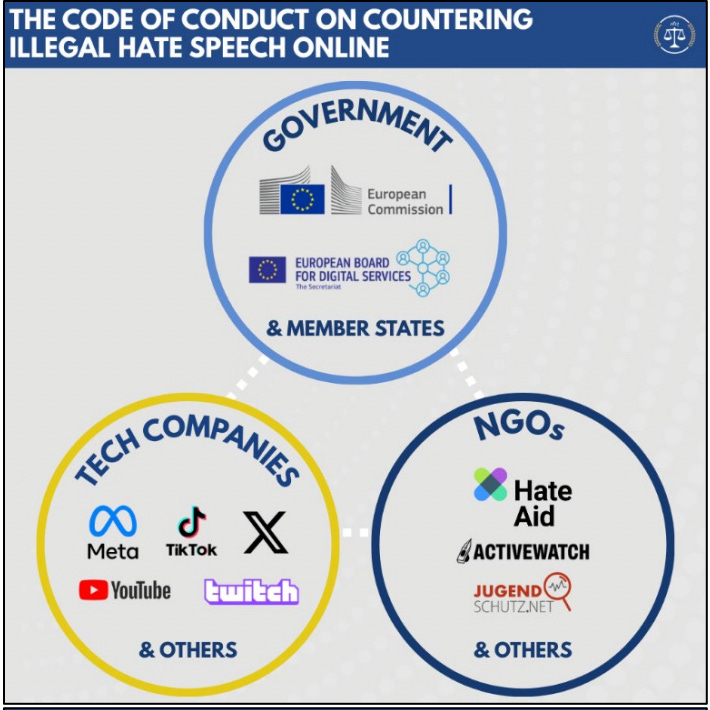

The European Commission also enforced its censorship goals through allegedly voluntary “codes of conduct” on hate speech and disinformation. In 2016, the European Commission established a “Code of Conduct on Countering Illegal Hate Speech Online,” under which platforms including Facebook, Instagram, TikTok, and Twitter (now X) promised to censor vaguely defined “hateful conduct.”

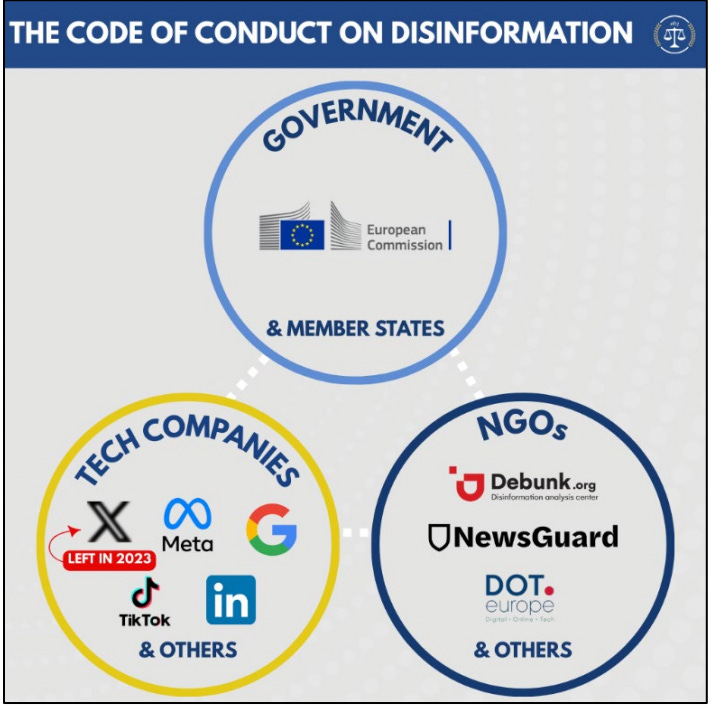

A “Code of Practice on Disinformation,” in which the same major platforms promised to “dilute the visibility” of alleged “disinformation,” followed in 2018. In high-level meetings with platforms, senior European Commission officials explicitly told the platforms that the Hate Speech and Disinformation Codes were intended to “fill [the] regulatory gap” until the EU could enact binding legislation governing platform “content moderation.”

At around the same time, the most powerful EU Member States, such as Germany, began enacting censorship legislation at the national level.

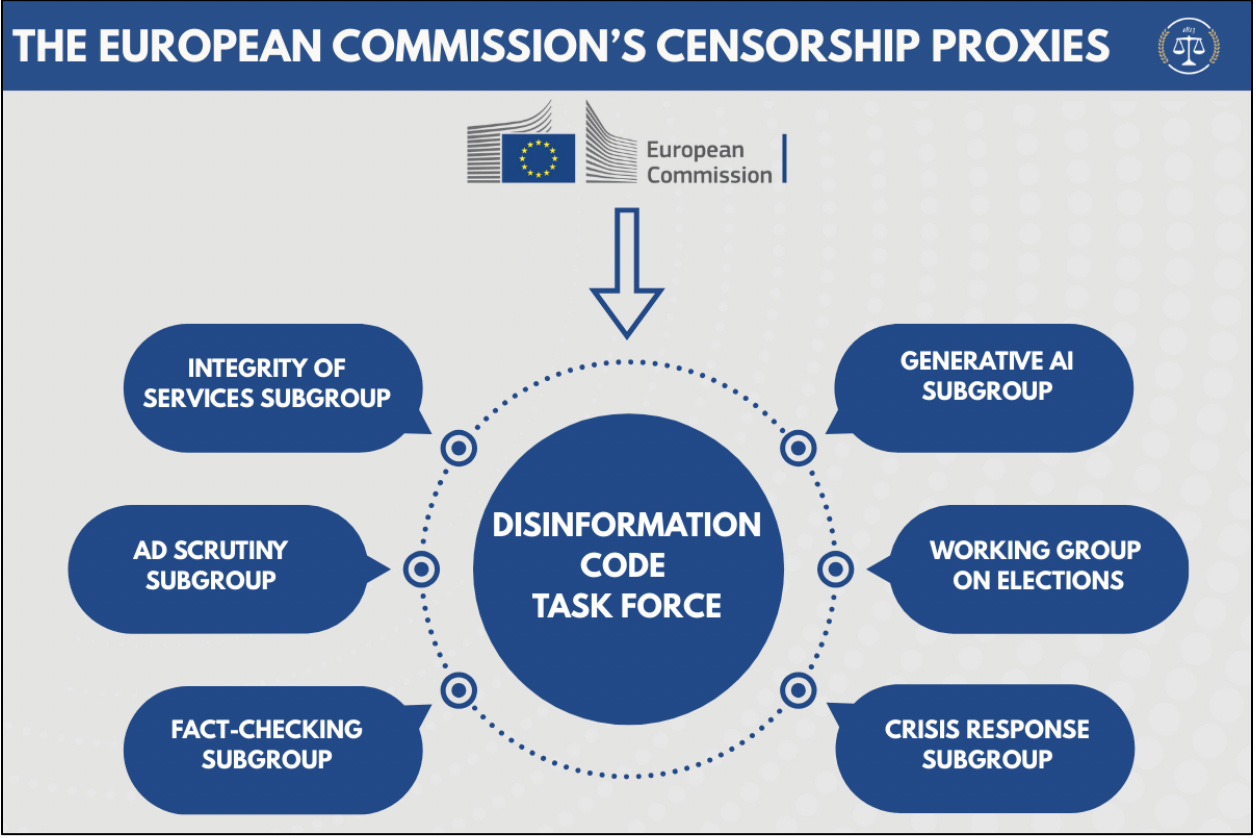

Later, in 2022 and right as the DSA was about to take effect, the European Commission updated the 2018 Disinformation Code. Under the new guidelines, platforms had to participate in a Disinformation Code “Task Force,” which would meet regularly to discuss platforms’ approach to censoring so-called disinformation. The Task Force broke into six “subgroups” focusing on specific disinformation topics, including fact-checking, elections, and demonetization of conservative news outlets. Across all of these subgroups, there were more than 90 meetings between platforms, censorious civil society organizations (CSOs), and European Commission regulators between late 2022 and 2024.

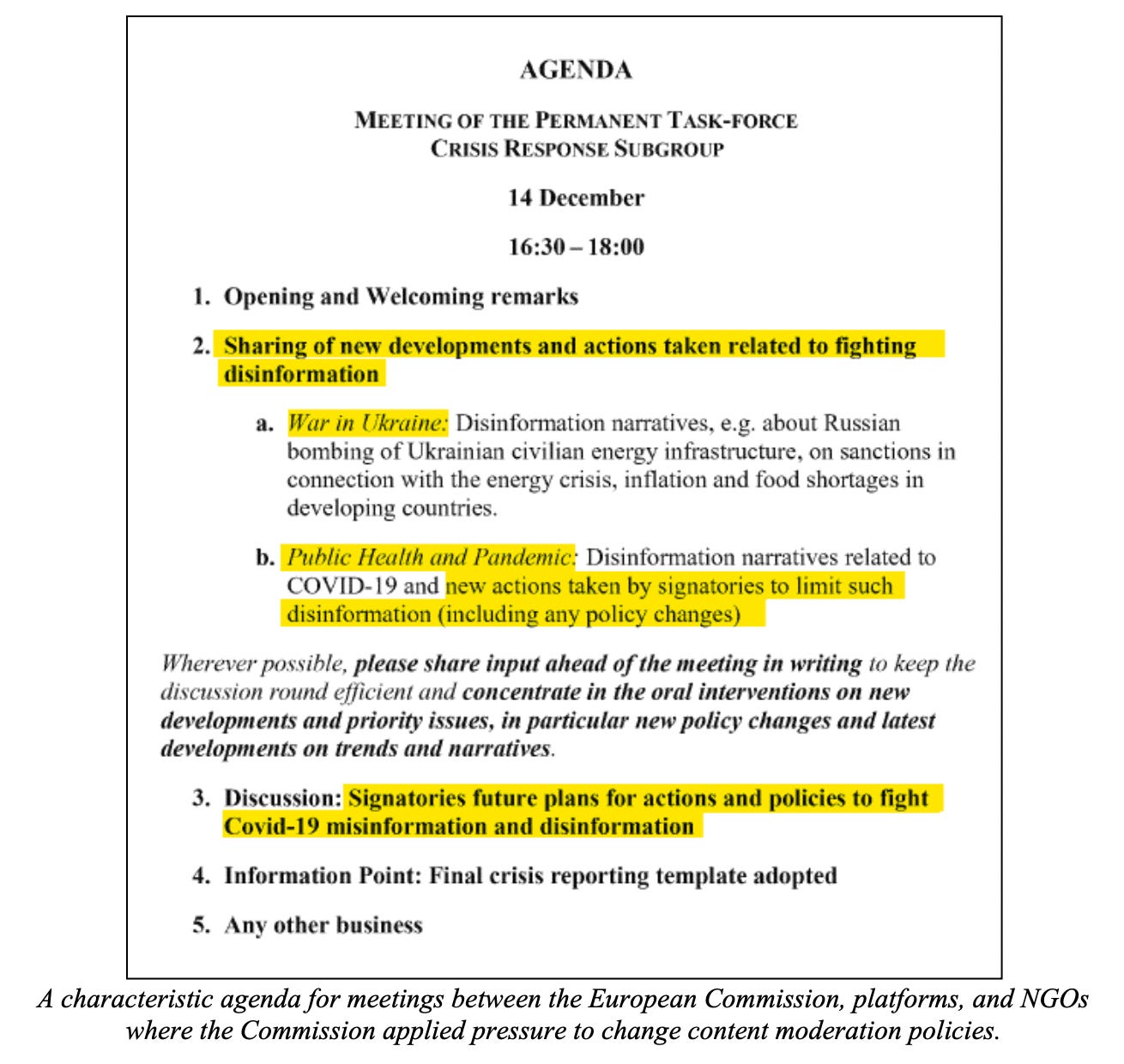

These meetings were a key forum for European Commission regulators to pressure platforms to change their content moderation rules and take additional censorship steps. For example, in over a dozen meetings of the Crisis Response Subgroup, the European Commission inquired about platforms’ “policy changes” “related to fighting disinformation.”

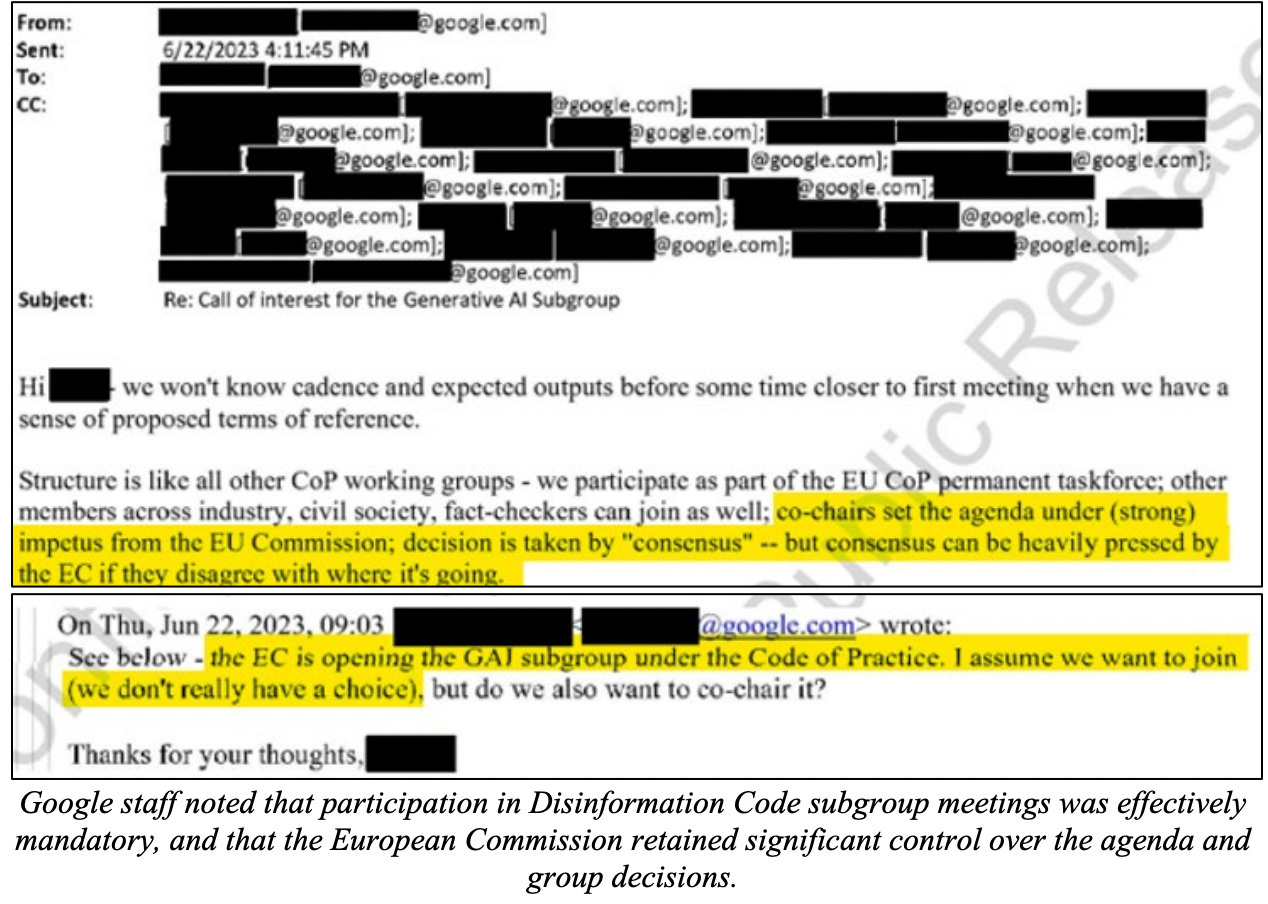

The “voluntary” and “consensus-driven” European censorship regulatory regime is neither voluntary nor consensus-driven.

Both before and after the DSA’s enactment, the European Commission established several forums to engage regularly with platforms about content moderation, including the Hate Speech Code and the Disinformation Code. These forums, which collectively held more than 100 meetings where regulators had the opportunity to pressure platforms to censor content more aggressively, were purportedly voluntary and intended to achieve “consensus” through a so-called regulatory dialogue. None of that was true. As internal company emails bluntly reveal, the companies knew that they “[didn’t] really have a choice” whether to join these voluntary initiatives. And the European regulators were running the show: agendas were set “under (strong) impetus from the EU Commission” and so-called “consensus” was achieved under heavy pressure from the European Commission.

The European Commission successfully pressured major social media platforms to change their global content moderation rules, directly infringing on American online speech in the United States.

Most major social media or video sharing platforms are based in the United States and have a single, global set of rules governing what content can or cannot be posted on the site. These rules set the boundary for what discourse is allowed in the modern town square, making them a key pressure point for regulators seeking narrative control to tighten their grip on political power. Critically, platform content moderation rules are, and effectively must be, global in scope. Country-by-country content moderation is a significant privacy threat, requiring platforms to know and store each user’s specific location every time he or she logs on. In an age where users can freely use virtual private networks (VPNs) to simulate their location and protect their personal information, country-by-country content moderation is also ineffective, in addition to creating immense costs for platforms of all sizes. The internet is global, and platforms govern themselves accordingly. That means that when European regulators pressure social media companies to change their content moderation rules, it affects what Americans can say and see online in the United States. European censorship laws affecting content moderation rules are therefore a direct threat to U.S. free speech.

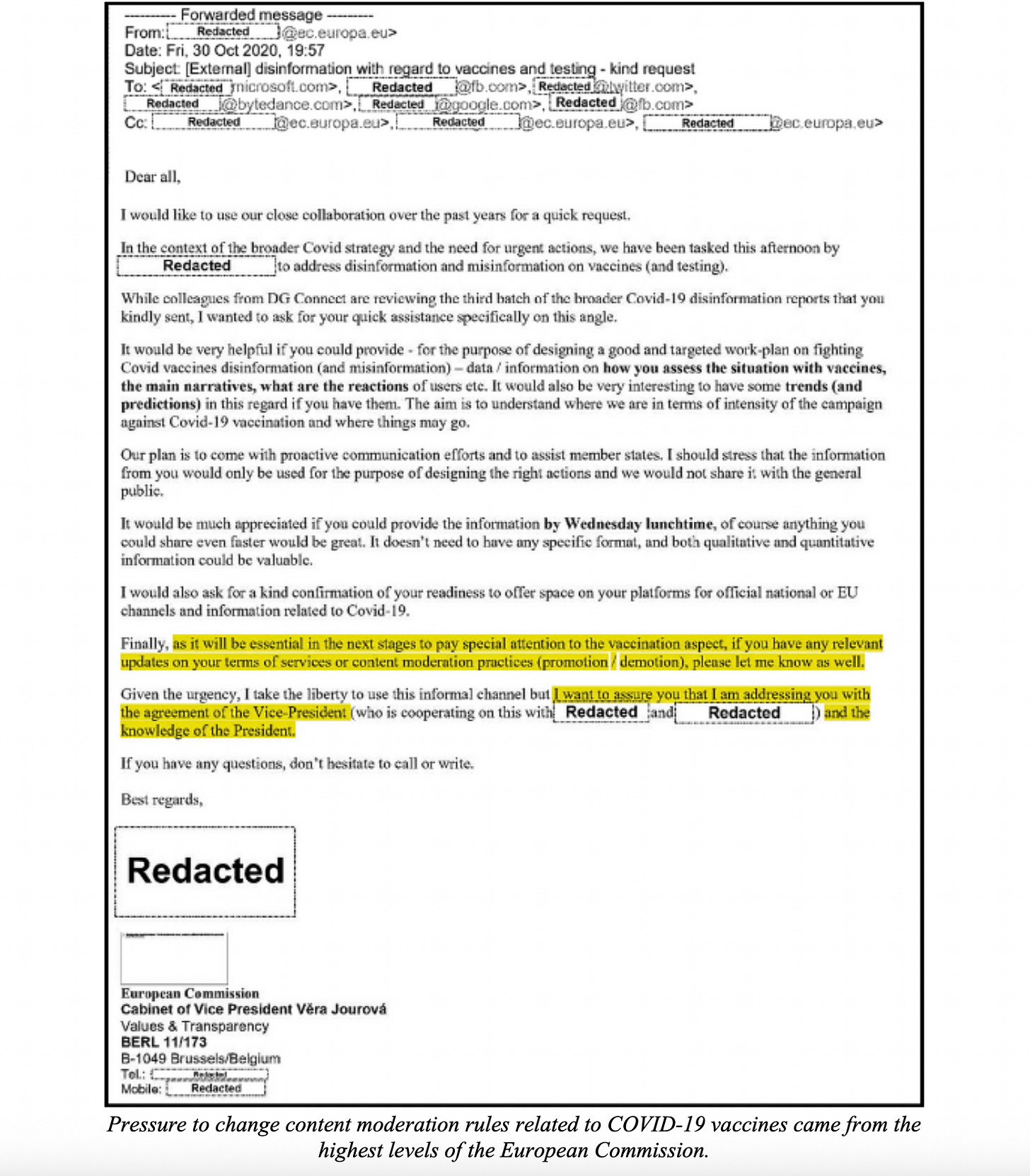

Years before the DSA’s enactment, the European Commission made these platform content moderation rules its primary target. During the COVID-19 pandemic, senior European Commission officials pressed platforms to change their content moderation rules to globally censor content questioning established narratives about the virus and the vaccine. With the approval of EU President Ursula von der Leyen and Vice President Vera Jourova, the European Commission asked platforms how they planned to “update[] . . . [their] terms of service or content moderation practices (promotion / demotion)” ahead of the rollout of COVID-19 vaccines.

Throughout the European Commission’s censorship campaign, the countless Disinformation Code, Hate Speech Code, and EU Internet Forum meetings provided more than 100 opportunities for the European Commission to pressure platforms to modify their content moderation policies and identify which online narratives on vaccines and other important political topics should be censored. For example, on over a dozen occasions over the course of just three years, the European Commission used the Disinformation Code Crisis Response Subgroup meetings to press platforms, such as YouTube and TikTok, on their “new developments and actions related to fighting disinformation,” specifically referencing “policy changes.”

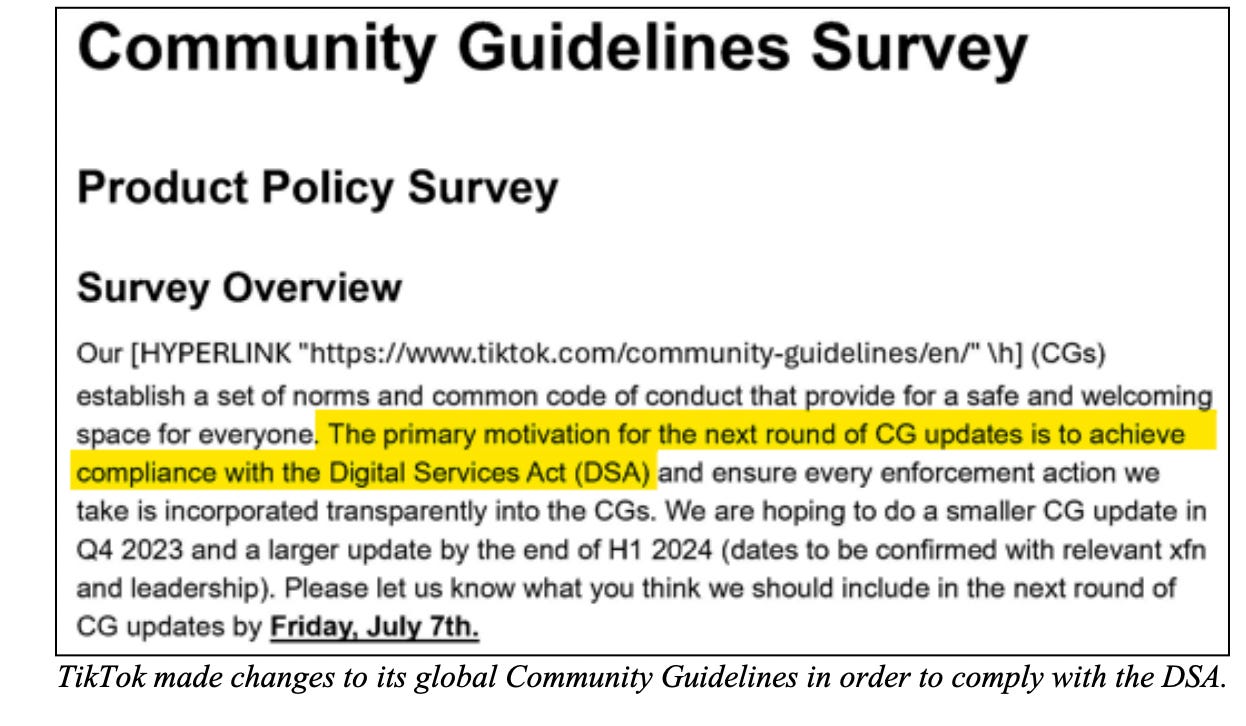

The pressure on platforms to comply with Europe’s censorship demands only intensified once the DSA was signed into law in October 2022. The European Commission warned platforms that they needed to change their global content moderation rules to comply with the DSA, or else risk fines up to six percent of global revenue and a possible ban from the European market. This decade-long pressure campaign was successful: platforms changed their content moderation rules and censored speech worldwide in direct response to the DSA and European Commission pressure. For example, in 2023, TikTok began editing its global Community Guidelines for the express purpose of “achiev[ing] compliance with the Digital Services Act.”

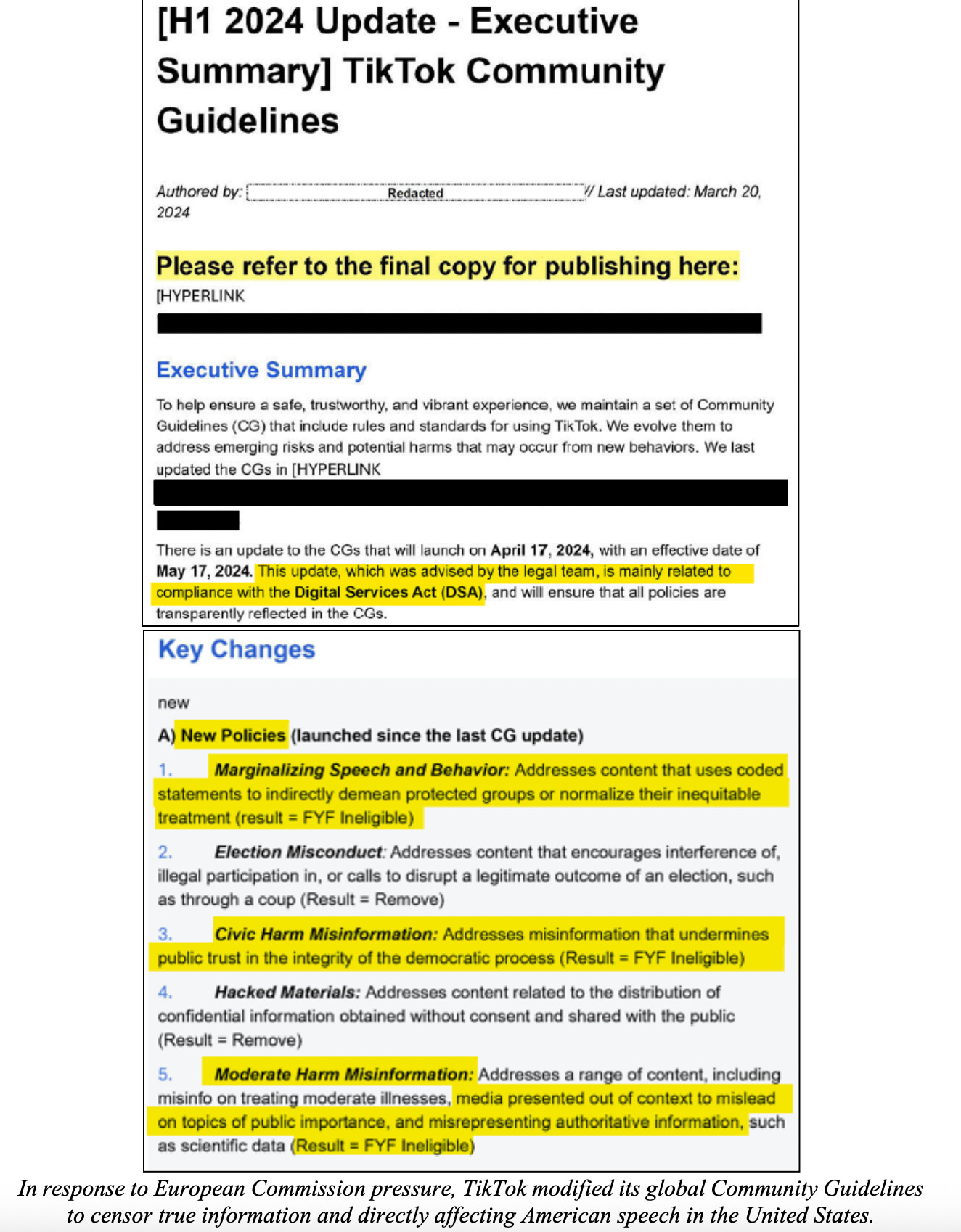

These new censorship rules went into effect in 2024. In response to the European Commission’s decade-long censorship campaign, TikTok instituted new rules censoring “marginalizing speech,” including “coded statements” that “normalize inequitable treatment,” “misinformation that undermines public trust,” “media presented out of context” and “misrepresent[ed] authoritative information.” These standards are inherently subjective and easily weaponized against the European Commission’s political opposition. In fact, these internal documents show that TikTok systematically censored true information around the world to comply with the European Commission’s censorship demands under the DSA. The document outlining these changes confirmed that, as “advised by the legal team,” the updates were “mainly related to compliance with the Digital Services Act (DSA).”

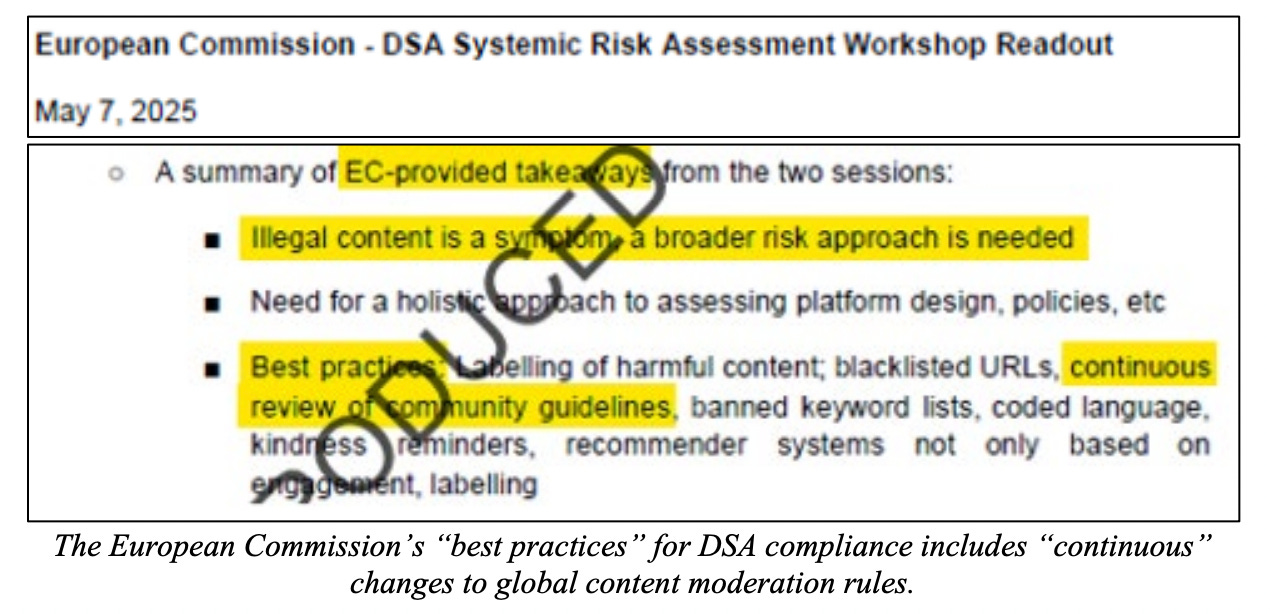

Documents indicate that these may not have been the only content moderation changes instituted in response to the DSA, either. During a presentation to the European Commission in July 2023, TikTok noted that “units with day-to-day activities overlapping the DSA, like Trust & Safety . . . [were] given new policies, rules, & [standard operating procedures]” to comply with the DSA. These internal documents suggest that TikTok changed significant portions of its extensive content moderation systems to comply with the European Commission’s demands. The European Commission’s focus on global content moderation rules remains: in May 2025, the European Commission explicitly told platforms at a closed-door “DSA Workshop” that “continuous review of [global] community guidelines” was a best practice for compliance with the DSA.

The European Commission is specifically focused on censorship of U.S. content.

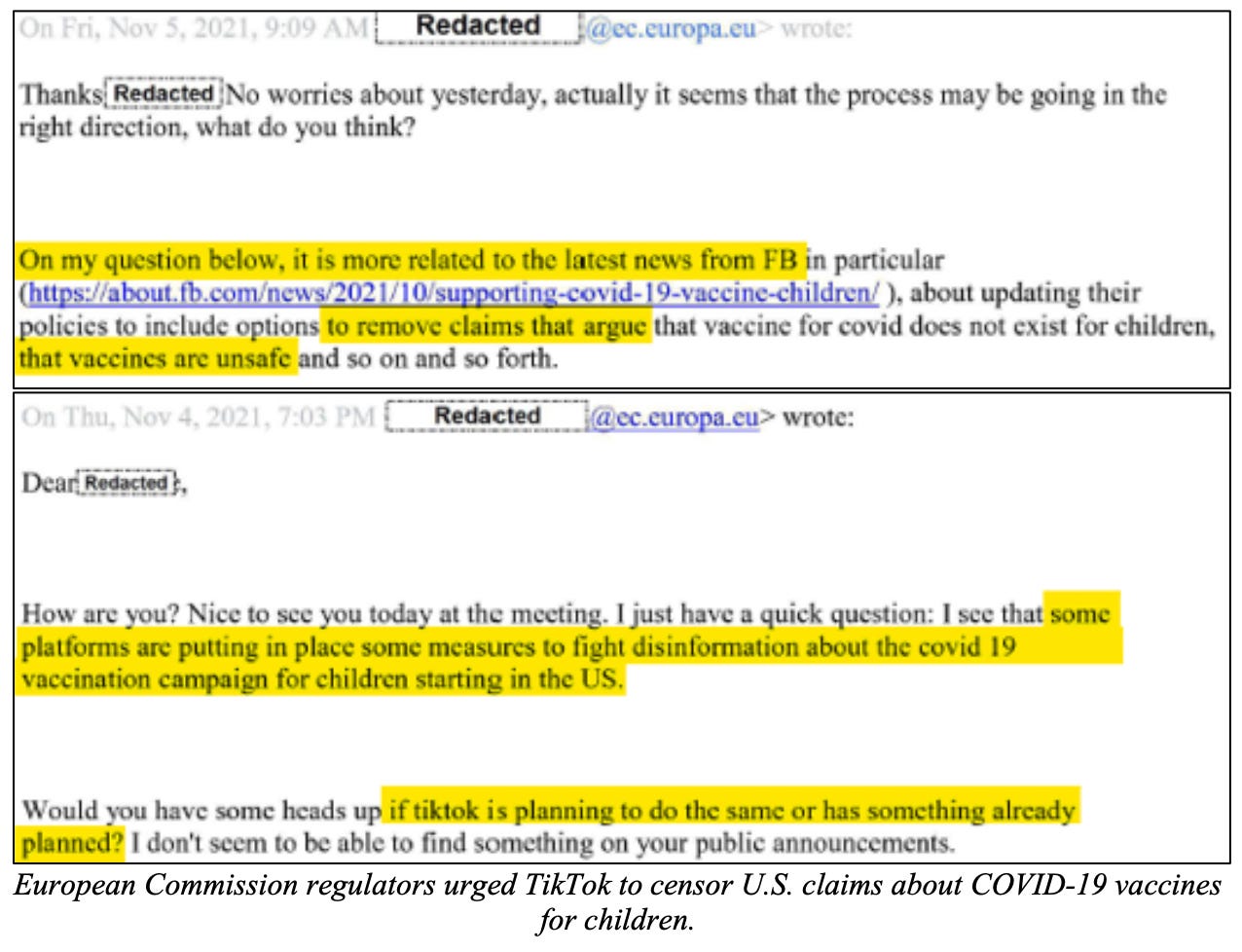

Not only did the European Commission harm American speech in the United States by pressuring platforms to change their global content moderation policies, but it also specifically sought to censor American content. This, too, began during the COVID-19 pandemic. In November 2021, the European Commission requested information about how TikTok planned to “fight disinformation about the covid 19 vaccination campaign for children starting in the US,” inquiring specifically about TikTok’s plans to “remove” certain “claims” about the efficacy of the COVID-19 vaccine in children.

A year later, European Commission regulators pressured platforms to remove an American documentary film about vaccines, demanding that YouTube, Twitter, and TikTok “check . . . internally” and respond “in writing” why the film had not been censored. YouTube responded to the European Commission promptly, stating that it “removed” the film in question after the European Commission raised the issue. Put plainly, the European Commission treated American debates around vaccination as within scope of the European Commission’s regulatory authority.

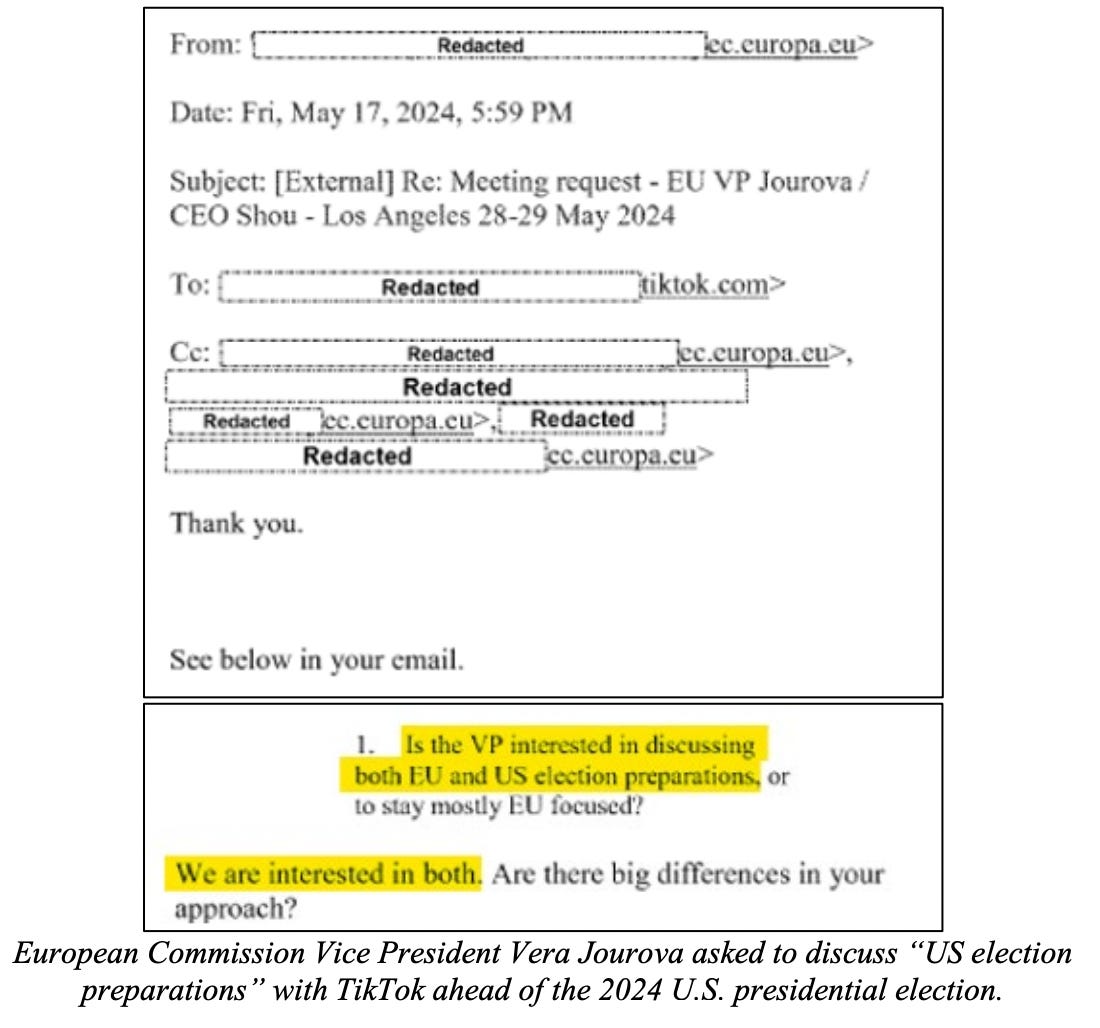

The European Commission’s focus on American speech was not limited to COVID-19-related content, either. Political appointees at the highest levels of the European Commission pressured TikTok to more aggressively censor U.S. content ahead of the 2024 U.S. presidential election.

Most infamously, then-EU Commissioner for Internal Market Thierry Breton sent a letter to X owner Elon Musk in August 2024 ahead of Musk’s interview with President Donald Trump.

Breton threatened X with regulatory retaliation under the DSA for hosting a live interview with President Trump in the United States, warning that “spillovers” of U.S. speech into the EU could spur the Commission to adopt retaliatory “measures” against X under the DSA. Breton threatened that the European Commission “[would] not hesitate to make full use of [its] toolbox” to silence this core American political speech. In response to Breton’s threats, the Committee sent two letters outlining how his threats undermined free speech in the United States and constituted election meddling in the American presidential election. Shortly thereafter, Breton resigned.

The European Commission sought to minimize Breton’s letter to Musk as an unapproved freelance from a rogue Commissioner acting alone. But months before Commissioner Breton’s letter, other senior European Commission officials were similarly pressing Big Tech executives for more information on how they planned to moderate election-related speech ahead of the 2024 U.S. presidential election.

In May 2024, European Commission Vice President Jourova traveled to California to meet with major tech platforms. During this trip, Jourova met with TikTok CEO Shou Chew and TikTok’s Head of Trust and Safety to discuss topics including “election preparations.” TikTok sought confirmation on whether the European Commission Vice President was traveling all the way to California to have a meeting that “stay[ed] mostly EU focused,” or whether she wanted to discuss “both EU and US election preparations.” The European Commission confirmed that Vice President Jourova wanted to discuss “both.”

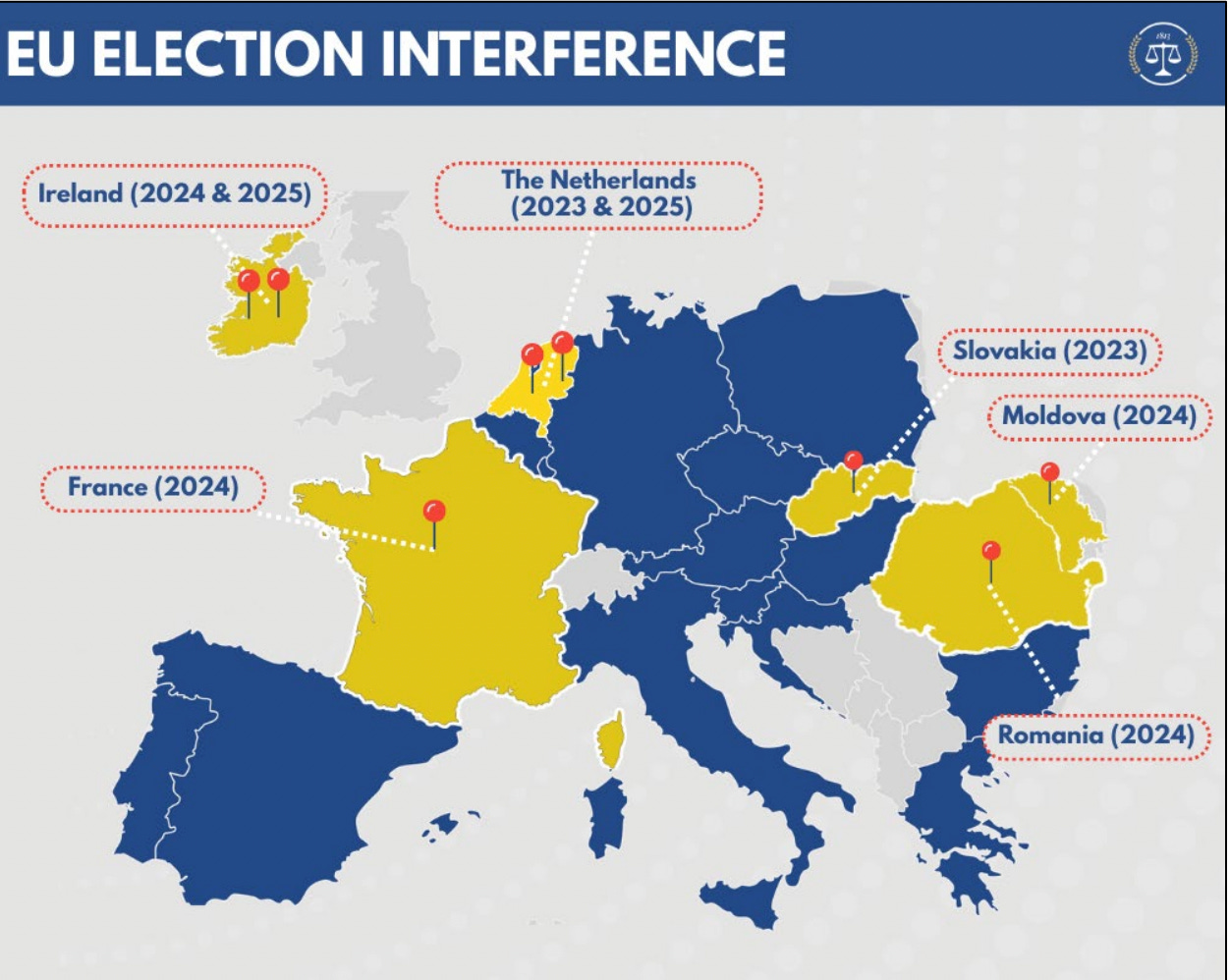

The European Commission regularly interferes in EU Member State national elections.

The European Commission works to influence EU Member States by controlling political speech during election periods. Most strikingly, the European Commission issued DSA Election Guidelines in 2024 requiring platforms to take additional censorship steps ahead of major European elections, such as:

“Updating and refining policies, practices, and algorithms” to comply with EU censorship demands;

Complying with “best practices” outlined in the Disinformation Code, the Hate Speech Code, and EUIF documents;

“Establishing measures to reduce the prominence of disinformation”;

“Adapt[ing] their terms and conditions . . . to significantly decrease the reach and impact of generative AI content that depicts disinformation or misinformation”;

“Label[ing]” posts deemed to be “disinformation” by government-approved, leftwing fact-checkers;

“Developing and applying inoculation measures that pre-emptively build resilience against possible and expected disinformation narratives”; and

Taking additional steps to stop “gendered disinformation.”

These DSA Election Guidelines were branded as voluntary best practices. But behind closed doors, the European Commission made clear that the Election Guidelines were obligatory. Prabhat Agarwal, the head of the Commission’s DSA enforcement unit, described the Guidelines as a floor for DSA compliance, telling platforms that if they deviated from the best practices, they would need to “have alternative measures that are equal or better.” Moreover, the European Commission’s election censorship mandates likely had extraterritorial effects. For example, companies disclose in mandatory reports to the European Commission the company’s standard election-related “policies, tools, and processes.” The European Commission regularly engages with large social media platforms on what election-related changes should be made, and hosts DSA-related discussions in non-EU countries.

Since the DSA came into force in 2023, the European Commission has pressured platforms to censor content ahead of national elections in Slovakia, the Netherlands, France, Moldova, Romania, and Ireland, in addition to the EU elections in June 2024. Nonpublic documents produced to the Committee pursuant to subpoena demonstrate how the European Commission regularly pressured platforms ahead of EU Member State national elections in order to disadvantage conservative or populist political parties.

Nonpublic meeting agendas and readouts show that the European Commission regularly convened meetings of national-level regulators, left-wing NGOs, and platforms prior to elections to discuss which political opinions should be censored. The European Commission also helped to organize “rapid response systems” where government-approved third parties were empowered to make priority censorship requests that almost exclusively targeted the ruling party’s opposition. TikTok reported to the European Commission that it censored over 45,000 pieces of alleged “misinformation,” including clear political speech on topics including “migration, climate change, security and defence and LGBTQ rights,” ahead of the 2024 EU elections.

The 2023 Slovak election is one key example. TikTok’s internal content moderation guides show that TikTok censored the following “hate speech” while facing European censorship pressure:

“There are only two genders”;

“Children cannot be trans”;

“We need to stop the sexualization of young people/children”;

“I think that LGBTI ideology, gender ideology, transgender ideology are a big threat to Slovakia, just like corruption”; and

“Targeted misgendering.”

These statements are not “hate speech”; they are political opinions about a current contentious scientific and medical issue. TikTok itself noted that some of these political opinions were “common in the Slovak political discussions.” Yet, under pressure from the European Commission, TikTok censored these claims ahead of Slovakia’s national parliamentary elections.

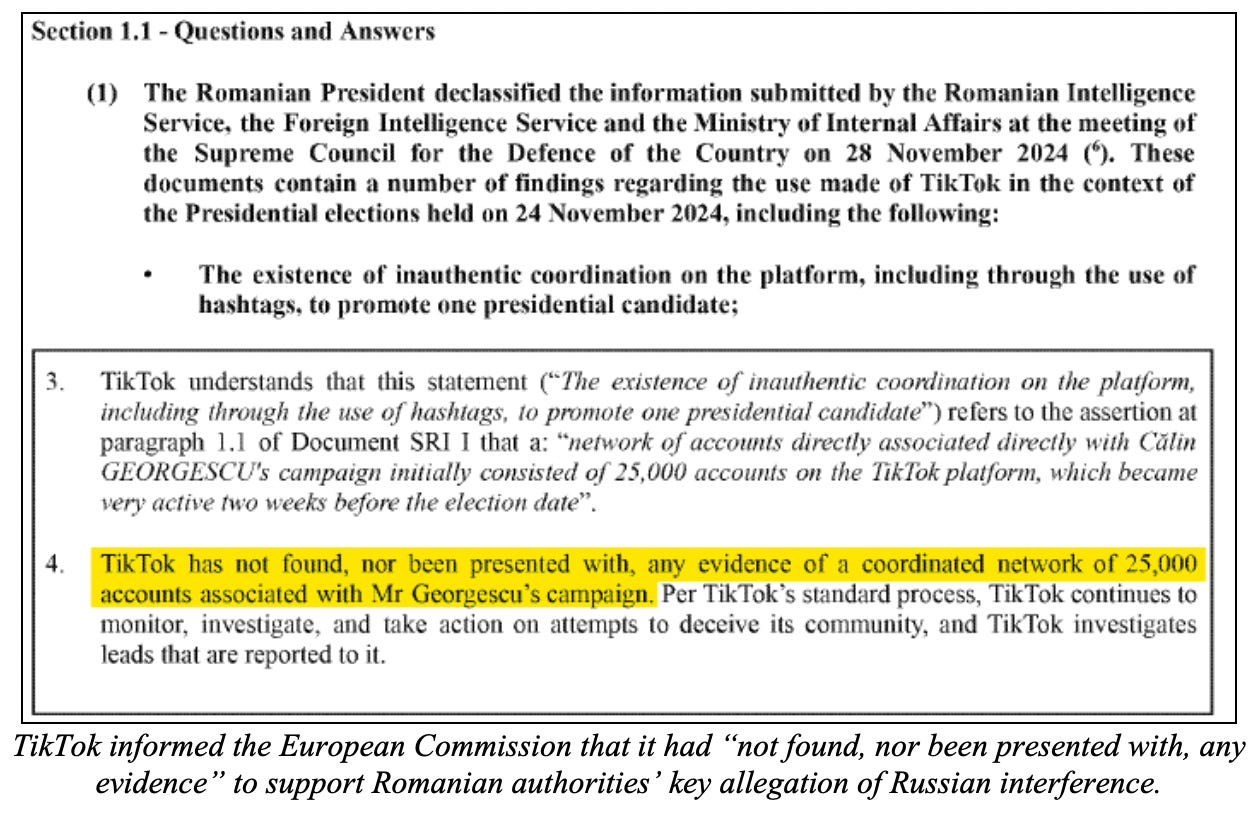

The European Commission took its most aggressive censorship steps during the 2024 Romanian presidential election. In December 2024, Romania’s Constitutional Court annulled the results of the first round of the previous month’s presidential election, won by little-known independent populist candidate Calin Georgescu, after Romanian intelligence services alleged that Russia had covertly supported Georgescu through a coordinated TikTok campaign. Internal TikTok documents produced to the Committee seem to undercut this narrative. In submissions to the European Commission, which used the unproven allegation of Russian interference to investigate TikTok’s content moderation practices, TikTok stated that it “ha[d] not found, nor been presented with, any evidence of a coordinated network of 25,000 accounts associated with Mr. Georgescu’s campaign”—the key allegation by the intelligence authorities.

By late December 2024, media reports citing evidence from Romania’s tax authority found that the alleged Russian interference campaign had, in fact, been funded by another Romanian political party. But the election results were never reinstated, and in May 2025, the establishment-preferred candidate won Romania’s presidency in the rescheduled election.

The European Commission is continuing to weaponize the DSA to censor content beyond its borders.

After a decade of censorship, the European Commission continues to abandon Europe’s historical commitment to free speech. In December 2025, the European Commission issued its first fine under the Digital Services Act, targeting X for a litany of ridiculous violations in an obviously pretextual attempt to penalize the platform for its defense of free speech.

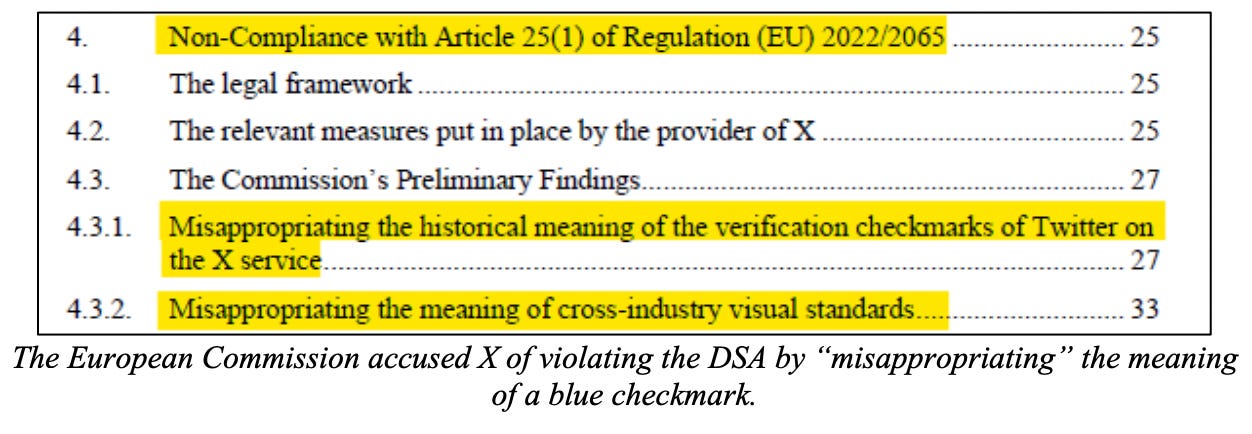

The European Commission fined X €120 million, slightly below the statutory cap of six percent of global revenue, for alleged violations including “misappropriating” the meaning of blue checkmarks by changing how they were awarded. Moreover, despite the European Commission’s protestations that the DSA applies only in the EU, its X decision enforced the DSA in an extraterritorial manner. The decision asserts that under the DSA’s researcher access provision, X, an American company, must hand over American data to researchers around the world, all because of a European law. And the European Commission threatened to ban X in the EU if it does not comply with its censorship demands.

The European Commission’s decision to fine X is chilling in at least two distinct ways: it penalizes X for its global defense of free speech, and it claims the authority to enforce the DSA globally. It is everything the Committee has warned about for well over a year.

Two recent EU initiatives also threaten to worsen the European free speech crisis. Under President von der Leyen’s “Democracy Shield,” the European Commission will create at least two new censorship hubs for regulators and left-wing NGOs to pressure platforms to censor conservative content—the European Center for Democratic Resilience and the European Network of Fact-Checkers. Under the same proposal, the European Commission is seeking to expand the Disinformation Code to include requirements related to “user verification tools,” which could effectively end anonymity on the internet by requiring users to show identification in order to create an account. The Commission is also seeking to circumvent normal democratic processes to create a single, expansive definition of illegal “hate speech” across Europe. This would require every EU member state to adopt the Commission’s definition, which includes conventional political discourse and “memes.” The European censorship threat shows no signs of abating.

The Committee is conducting its investigation into foreign censorship laws, regulations, and judicial orders because of the risk they pose to American speech in the United States. The EU’s DSA, in particular, represents a grave danger to American freedom of speech online: the European Commission has intentionally pressured technology companies to change their global content moderation policies, and deliberately targeted American speech and elections. The European Commission’s extraterritorial actions directly infringe on American sovereignty. The Committee will continue to develop legislative solutions to defend against and effectively counter this existential risk to Americans’ most cherished right.

END OF EXECUTIVE SUMMARY


Two points. To hell with Europe. We should set up a US commission and fine European entities until they bleed for every imposition on US free speech. Then there is the problem of the left-leaning tendency of Silicon Valley, so prevalent that its migration to the Texas Hill Country changed the I-35 corridor from solid red to purple in a mere four years. So we must assume their willingness to collaborate with the European lefty cabal and legislate accordingly to protect our speech.

Please keep burning the light bright. The level of evil is astounding, and we are all blessed that you have taken up the mantle to bring truth and light to this evil. The level of complicit behavior from those that we might have trusted in the past is truly astounding!