Nudge and Behavioral Governance: Systematic Government Thought Modification

Beyond surveillance, beyond traditional propaganda, your government is algorithmically molding your thoughts, opinions, and beliefs.

Introduction

Have you noticed how, in the current political climate, many people seem “triggered” by various issues, leading to irrational, exaggerated responses? This behavioral “triggering” is often engineered, driven by governmental, political party, or NGO deployment of nudge campaigns.

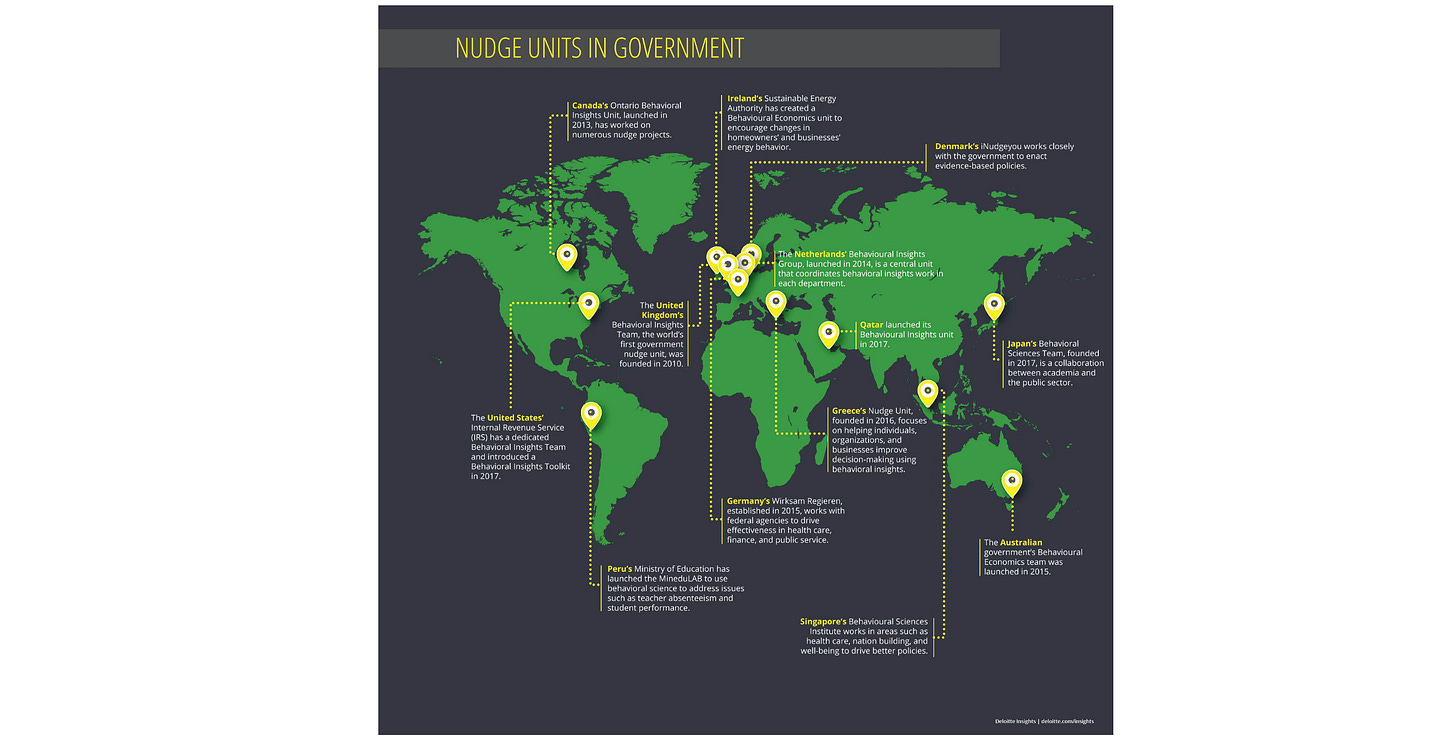

The use of “nudge” technology, the strategic application of behavioral psychology, data analytics, and subtle digital manipulation to steer citizen behavior, has become ubiquitous over the past decade, particularly in Western “democracies.” What began as a modest academic concept under behavioral economics has evolved into sprawling governmental architectures of influence operating largely without public awareness or oversight.

Nudge technology refers to the deliberate design and use of psychological, informational, or technological cues that subtly steer people’s choices and behaviors without overt coercion or explicit mandates. The concept emerged from behavioral economics—popularized by Richard Thaler and Cass Sunstein’s Nudge (2008)—which argued that human decision‑making is heavily shaped by context, defaults, and emotional triggers rather than rational calculation.

At its core, nudge technology operationalizes “choice architecture”: policymakers, corporate designers, or algorithm engineers arrange the environment so that one option becomes the easiest, most visible, or most emotionally appealing path. For example:

Automatically enrolling workers into pension plans rather than requiring them to opt in.

Displaying calorie counts or carbon scores next to food items to socially prompt “better” choices.

Using “reminder notifications,” fear‑based health messages, or “safety badges” on social media to manipulate opinions and behavior.
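The default effect behind the first example can be sketched with a few lines of arithmetic. This is a minimal illustration, not a model drawn from any agency or study: the function name `enrollment_rate` and the figures used (30 percent of workers making an active choice, 60 percent of those wanting the plan) are assumptions chosen only to show how the default alone moves the outcome.

```python
def enrollment_rate(default_enrolled: bool,
                    active_fraction: float,
                    prefers_enrolled: float) -> float:
    """Share of people who end up enrolled under a given default.

    Hypothetical toy model: `active_fraction` of people make an active
    choice that matches their preference; everyone else stays with
    whatever the default happens to be.
    """
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0 and 1")
    if not 0.0 <= prefers_enrolled <= 1.0:
        raise ValueError("prefers_enrolled must be between 0 and 1")
    # People who choose actively enroll only if they want to.
    active_enrolled = active_fraction * prefers_enrolled
    # People who do nothing are enrolled only when enrollment is the default.
    passive_enrolled = (1.0 - active_fraction) if default_enrolled else 0.0
    return active_enrolled + passive_enrolled


# Illustrative numbers only (assumptions, not survey data).
opt_in = enrollment_rate(default_enrolled=False, active_fraction=0.3, prefers_enrolled=0.6)
opt_out = enrollment_rate(default_enrolled=True, active_fraction=0.3, prefers_enrolled=0.6)
print(f"opt-in default:  {opt_in:.0%} enrolled")
print(f"opt-out default: {opt_out:.0%} enrolled")
```

Under these assumed inputs, the same population with the same preferences ends up far more enrolled when enrollment is the default, which is the whole point of arranging the choice that way.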

Among the many examples of how the CDC uses technological nudging are its campaigns promoting vaccine uptake. In the example pictured above, a campaign message was coupled to a non-CDC-produced YouTube video discussing vaccines. In total, the CDC spends roughly 4–6 percent of its discretionary budget on behavioral science–driven persuasion, billions of taxpayer dollars over the last few years, largely for campaigns that used emotional manipulation rather than open scientific communication.

The agency justifies this spending as “improving public health messaging,” but what it actually funds is an expansive psychological compliance framework rather than classical public information. The CDC typically labels these projects not as “nudge programs” but as “Strategic Communication and Stakeholder Engagement,” “Behavioral Insights Evaluation,” or “Vaccine Confidence Initiatives.”

Behavioral governance is the systematic use of behavioral science, data analytics, and psychological design to steer citizen behavior toward government‑defined goals without overt coercion or explicit legal compulsion. It operates by embedding subtle cues into public policies and digital communication systems. Examples include defaults, emotional framing, social norms, reward and penalty structures, and algorithmic personalization.

Rather than persuading through open debate or legislating through enforceable law, behavioral governance shapes decisions at the subconscious level, leveraging insights from psychology, neuroscience, and large‑scale data to generate predictable social outcomes. The result is a modern form of soft control: governance through engineered choice environments that preserve the appearance of freedom while directing behavior in ways aligned with institutional interests.

Nudge and behavioral governance become technological through digital data and AI integration: algorithms that personalize nudges using real‑time behavioral analytics. Governments and corporations now employ predictive modeling, micro‑targeted messaging, and engagement metrics to fine‑tune persuasion, from vaccine uptake to political sentiment to selling products, including medicines.

In essence, nudge technology is the hidden hand of digital behavioral control: it preserves the appearance of free choice while quietly exploiting cognitive biases to produce desired outcomes. This makes it ethically ambiguous and highly effective for shaping population behavior, but deeply concerning for autonomy, consent, and democracy itself.

By 2024, over 200 behavioral‑insight units were identified globally, coordinating via the OECD Behavioral Insights Network. More about the OECD appears below.

Surveys of OECD member governments show over 80 percent use behavioral techniques in at least one major policy area.

Many policies that appear “voluntary”, like organ donor defaults, vaccination reminders, or “safe browsing” modes, are in fact nudge-engineered defaults backed by intricate data analytics.

What is the OECD?

The OECD Directorate for Public Governance supports policy makers in both OECD member and partner countries, by providing a forum for policy dialogue and the creation of common standards and principles. We provide policy reviews and practical recommendations targeted to the reform priorities of each government.

We provide comparative international data and analysis, to support public sector innovation and reform. Our networks include government officials as well as experts from the private sector, civil society organisations and trade unions.

We help governments design and implement strategic, evidence-based and innovative policies to strengthen public governance, respond effectively to diverse and disruptive economic, social and environmental challenges and deliver on government’s commitments to citizens. - OECD

Government use of nudge technology is no longer experimental; it is systemic. It represents a migration from open persuasion to covert psychological architecture, where the State seeks compliance not through debate but through design. In this sense, “nudge” has become the modern euphemism for behavioral governance: the art of managing perception in a “democracy” where the State no longer fully trusts its citizens to think independently.

“Behavioral governance” is how Western “democracies” systematically respond to the perceived threat to the status quo posed by populist movements.

Nudge is applied soft power disguised as convenience. In other words, Western governments have come to believe that it is acceptable to control their citizens' thoughts, beliefs, and the information landscape available to them, as a means of manufacturing political and social consensus that aligns with governmental policies.

In such a system, citizens no longer independently act as sovereign, autonomous individuals to select or influence public policy decisions via the ballot box. The government determines policy, then shapes citizens' thoughts and beliefs to align with and endorse those decisions. The State has determined that it knows best and must employ advanced psychological methods to manipulate citizens into compliance.

The Role of President Barack Obama in Promoting USG Use of Nudge Technology

Barack Obama played a pivotal role in embedding behavioral science, and with it nudge theory, into the U.S. government’s policymaking apparatus. Building on insights from behavioral economics, Obama sought to make public administration more “scientifically efficient” by steering citizens’ decisions through carefully designed psychological cues rather than direct mandates.

In 2015, he formalized this approach through Executive Order 13707, Using Behavioral Science Insights to Better Serve the American People, which instructed every federal agency to integrate behavioral research into its programs. That directive institutionalized the Social and Behavioral Sciences Team (SBST) within the White House Office of Science and Technology Policy, charged with redesigning communication, benefits enrollment, tax notices, and health campaigns to shape public choices subtly and predictably. With that step, Obama established the federal framework for “choice architecture”: an administrative system that uses emotional framing, default settings, and social‑norm pressure to influence behavior while maintaining an appearance of voluntariness. The model paralleled the U.K.’s Behavioural Insights Team and quickly spread through HHS, IRS, Education, and other agencies.

Executive Order 13707 has not been formally rescinded or revoked, either as a standalone act in the official Federal Register or by a subsequent Executive Order that explicitly lists it among those revoked. Issued by President Barack Obama on September 15, 2015, it remains on the books.

Although presented as improving government efficiency, this initiative entrenched behavioral governance as standard practice, blurring traditional boundaries between public information and psychological persuasion. In effect, Obama converted nudge theory in the United States from an academic concept into a permanent state mechanism, one that continues today, for managing citizen behavior through data‑driven suggestions rather than open debate or coercion.


How Was “Nudge” Theory Transformed by the USG into Full-scale Behavioral Governance?

What began as simple “choice architecture” meant to improve efficiency in government services evolved into a system that uses surveillance data, algorithmic analytics, and emotional framing to shape public behavior across multiple domains, including health, climate, finance, and online speech. The shift accelerated after Barack Obama’s 2015 executive order institutionalized behavioral science in U.S. agencies, creating dedicated behavioral‑insight teams that optimized compliance through message design, digital defaults, and social‑norm pressure.

During the COVID‑19 era, that infrastructure merged with Big Tech platforms. Behavioral units within HHS, CDC, and CISA coordinated with social‑media companies to deliver tailored “nudges” at scale: adjusting visibility, tone, and emotional resonance of information to engineer consent. In this digital phase, the citizen became a behavioral dataset, monitored and modeled in real time, while the government acted as the unseen architect of perception. Thus, nudge theory’s benign language of “helping people make better choices” matured into a system of psychological governance: subtle, pervasive, and largely unaccountable, where democratic debate is supplanted by algorithmic influence and behavioral prediction.

Obama’s presidency marks the historical inflection point when behavioral economics was weaponized into behavioral governance. His executive order created permanent internal frameworks for manipulating citizen decisions under the pretext of efficiency. When the pandemic struck, that apparatus was merely turned outward and supercharged by social‑media integration.

In short, the “nudge” became an algorithm, and the citizen became a behavioral dataset. The goal was that no coercion would be required in the future, just guided cognition.

USG Use of Nudge Technology During COVID

During the COVID‑19 pandemic, a tightly networked alliance of governmental and private entities operationalized behavioral‑science methods to influence public attitudes and compliance. At the core was the U.S. Department of Health and Human Services (HHS) and its Behavioral Science and Analytics Division, which coordinated messaging research across agencies.

These units partnered with major contractors like the MITRE Corporation, which provided behavioral‑data infrastructure and flagged online narratives for “risk scoring,” linking government guidance with real‑time monitoring of social‑media responses. Meanwhile, Big Tech firms acted as amplification engines: Google, through its Jigsaw think unit, managed “pre‑bunking” campaigns and algorithmic ranking to pre‑empt content deemed misleading; Meta calibrated emotional tone and visibility of health posts to boost preferred narratives; and Twitter (now X) cooperated via content moderation agreements with HHS and CISA.

Private marketing agencies such as the Ad Council and Publicis Health executed nationwide campaigns crafted from these insights, embedding psychological triggers such as a sense of community duty and fear of exclusion. Academic partners, including Stanford’s Internet Observatory, Johns Hopkins, and the University of Washington’s Center for an Informed Public, supplied experimental validation and ethical justification. Together, this coalition formed a seamless state‑tech behavioral apparatus, uniting government policy goals with digital persuasion technology to manage perception and compliance on a national scale while largely bypassing transparent public debate.

For example, the CDC continues to use several behavioral science methods in vaccination campaigns to improve uptake and address hesitancy:

1. Behavioral Nudges

The CDC applies nudge theory, subtle changes in how choices are presented, to encourage vaccination without restricting options. Examples include:

Social comparison nudges: Sharing data like “7–8 out of 10 people in your age group have gotten vaccinated” to reinforce social norms.

Influence-based messaging: Messages such as “Your vaccination can encourage others” (gain-framed) or “If you don’t vaccinate, others may follow” (loss-framed) leverage social influence.

2. Presumptive Communication

Shifting from participatory to presumptive language in healthcare settings (e.g., “Today we are vaccinating your child” instead of “Would you like to vaccinate today?”) increases compliance by framing vaccination as the expected norm.

3. Reminders and Recall Systems

The CDC supports automated reminders via text, email, or phone calls to reduce forgetfulness and procrastination. These are especially effective for childhood and seasonal vaccines.

4. Trusted Messengers

Using familiar and trusted sources, such as healthcare providers, community leaders, or peer advocates, improves message reception and vaccine confidence.

5. Social and Behavioral Research

The CDC conducts surveys, focus groups, and social listening to understand community attitudes, misinformation, and barriers to vaccination. This informs culturally appropriate messaging and interventions.

6. Framing and Messaging Strategies

Messages are tailored using behavioral insights:

Emphasizing vaccine safety, efficacy, and personal relevance.

Highlighting personal and community benefits (e.g., returning to normal life).

Using emotional associations through videos or images to increase engagement.

7. Healthcare Provider Engagement

Encouraging provider recommendations is a key strategy, as data show that adults are more likely to vaccinate when a healthcare professional recommends it.

These methods are applied across campaigns for COVID-19, flu, RSV, and routine immunizations, often informed by data from the National Immunization Survey and behavioral pilots, which are experimental programs designed to test which nudging techniques can change health behaviors, like getting vaccinated, before rolling them out more broadly.
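A behavioral pilot of the kind described above boils down to comparing uptake between a group that received a nudge and a group that did not. The sketch below shows that comparison as a standard two-proportion z-test. The function name `uptake_lift` and all counts are hypothetical, and nothing here is taken from CDC data or code.

```python
import math


def uptake_lift(control_n: int, control_vaccinated: int,
                nudge_n: int, nudge_vaccinated: int) -> tuple[float, float]:
    """Return (absolute lift, z-score) for a hypothetical two-arm pilot."""
    if control_n <= 0 or nudge_n <= 0:
        raise ValueError("both arms need at least one participant")
    p_control = control_vaccinated / control_n
    p_nudge = nudge_vaccinated / nudge_n
    # Pooled uptake rate under the assumption that the nudge has no effect.
    pooled = (control_vaccinated + nudge_vaccinated) / (control_n + nudge_n)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / control_n + 1 / nudge_n))
    lift = p_nudge - p_control
    z_score = lift / std_err if std_err > 0 else 0.0
    return lift, z_score


# Made-up pilot: 1,000 people per arm (illustrative assumption only).
lift, z = uptake_lift(control_n=1000, control_vaccinated=300,
                      nudge_n=1000, nudge_vaccinated=345)
print(f"lift: {lift:+.1%}, z-score: {z:.2f}")
```

A z-score above roughly 1.96 is the conventional two-sided threshold for calling a difference unlikely to be chance at the 5 percent level. A message variant that clears that bar in a pilot is the kind that would then be rolled out more broadly.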

USG Nudge Coordination with Private Sector, Academic, and Non-Profit Partners

MITRE Corporation

A leading government contractor managing systems for HHS, DHS, and DoD.

Ran the SQUINT program and the DISARM framework, software that flagged social‑media content for “risk scoring.”

MITRE SQUINT is a tool designed to help identify and report misinformation, particularly around elections and public health. Volunteers use the SQUINT app to capture and report suspicious social media posts, which are then verified by MITRE and shared with relevant election officials or public health authorities. The program was used during the 2020–2021 electoral season by the League of Women Voters of Colorado and has since been adapted for public health contexts.

DISARM (Disinformation Analysis & Risk Management Framework) evolved from the AMITT (Adversarial Misinformation and Influence Tactics and Techniques) framework, which was developed in collaboration with MITRE, Florida International University (FIU), and the CogSecCollab team. DISARM is modeled after MITRE’s ATT&CK framework and is used to describe, analyze, and counter disinformation campaigns using standardized tactics, techniques, and procedures (TTPs). It supports interoperability through STIX and TAXII standards and is now managed by the DISARM Foundation, a nonprofit dedicated to maintaining the framework as an open-source resource.

Provided back‑end analytics for HHS and CISA (Cybersecurity & Infrastructure Security Agency) to match behavioral data with engagement parameters like click‑through and dwell time.

Functioned as the unseen interface between federal narrative strategy and private content moderation.

Google / YouTube

Integrated behavioral tagging algorithms to de‑rank videos contradicting official guidance while amplifying content flagged as “confidence‑building.”

Provided ad‑space infrastructure for HHS campaigns and access to audience segmentation tools derived from users’ psychological profiles.

Google’s in‑house Behavioral Economics Team originated under the same philosophy as Obama’s nudge units and worked hand‑in‑glove with HHS contractors.

Meta (Facebook + Instagram)

Coordinated with the HHS communications team and the Data & Society Research Institute to push “prosocial health behaviors.”

Applied experimental “pro‑vaccination frames,” adjusting emotional tone of newsfeed content depending on user’s prior engagement history.

Platform data were shared in aggregated form with CDC analytics, feeding compliance dashboards showing county‑level sentiment shifts.

Twitter (now X)

Engaged in “partnership agreements” with CDC and CISA.

Internal communications later revealed lists of “flagged influencers” based on behavioral risk profiles—a direct descendant of Obama‑era “social compliance segmentation.”

Algorithms received behavioral updates weekly to maximize “positive information cascades.”

Google’s Jigsaw / Alphabet‑affiliated Think Units

Specializes in “pre‑bunking”: forewarning users psychologically to reject specific narratives.

This is classical nudge methodology repurposed digitally: influence anticipation.

Jigsaw ran multiple quietly funded projects under CISA and HHS grants to “study misinformation contagion,” effectively manufacturing new nudges through cognitive inoculation.

Ad Council & Publicis Health

Executed emotionally charged ad campaigns tested with federal behavioral data pools.

Ads invoked community duty, family protection, and guilt triggers: textbook nudges designed to drive behavior rather than independent assessment.

Academic Front Networks

Stanford Internet Observatory, Johns Hopkins Center for Health Security, and University of Washington’s Center for an Informed Public formed semi‑formal consortia with CISA and HHS to provide “evidence‑backed behavioral design.”

These centers operationalized real‑time message testing on platforms, evaluating “narrative virality decay” and recommending algorithmic throttling for undesired speech.

Much of this research was quietly funded under pandemic‑relief grants, effectively equating psychological management with emergency health infrastructure.

Mechanism of Coordination

Behavioral data flows from tech platforms → government contractors → HHS analytics.

Insights are fed into behavioral dashboards summarizing sentiment, compliance, and geographic variance.

Tailored nudge messages (emotional appeal, identity framing, fear modulation) are pushed back through ad networks, influencers, and “trusted messengers.”

Algorithms are continually adjusted based on these feedback loops. Public compliance thereby becomes a measurable product.

Summary

Governments, corporations, and NGOs increasingly use nudge technology, the calculated application of behavioral psychology, data analytics, and digital design, to manipulate human decision‑making while preserving the illusion of free choice.

Once an academic idea within behavioral economics, nudging has expanded into a vast, politically integrated system of behavioral governance that subtly engineers public behavior through digital cues, emotional framing, and algorithmic personalization. Examples such as automatic enrollments, health reminders, and social‑media “safety badges” illustrate how environmental design shapes behavior without explicit coercion.

In one example, the CDC has invested billions of dollars in behavioral‑science‑driven persuasion campaigns under innocuous terms like strategic communication or vaccine confidence initiatives, reframing propaganda as health education. These programs exemplify how psychological compliance mechanisms have replaced transparent, evidence‑based dialogue.

President Barack Obama institutionalized nudge theory, the application of behavioral psychology and “choice architecture” to shape public conduct, transforming it from an academic idea into formal U.S. government policy. With Executive Order 13707 in 2015, Obama directed every federal agency to integrate behavioral insights into decision‑making, establishing the Social and Behavioral Sciences Team (SBST) within the White House.

This initiative, modeled on Britain’s Behavioural Insights Team, embedded psychological framing, social‑norm pressure, and default‑based design into federal communications, benefits programs, and public‑health messaging. What began as an efficiency project quietly became a behavioral‑governance framework, relying on emotional and subconscious influence rather than transparent persuasion. The Obama White House instructed every agency to adopt behavioral research to improve program “efficiency.” In practice it established a national system of choice architecture, using defaults, emotional framing, and social‑norm pressure to influence citizen behavior under the guise of voluntary decision‑making.

Under this model, nudging evolved during the COVID‑19 era into a digitally integrated system of real‑time behavioral control. Agencies such as HHS, CDC, and CISA worked alongside private “partners” including Google, Meta, Twitter/X, MITRE, the Ad Council, and Publicis Health, as well as academic partners from Stanford, Johns Hopkins, and the University of Washington. These collaborations employed data analytics, predictive modeling, and algorithmic content ranking to micro‑target citizens with emotionally framed messages and pre‑bunking campaigns designed to pre‑empt dissent. Behavioral metrics were continuously captured and recycled into feedback loops that refined future compliance strategies.

Executive Order 13707 remains in place to this day and has not been rescinded by the current administration. The CDC, an HHS agency, continues to apply nudge and behavioral governance tools and technologies under the current leadership of Secretary Kennedy and Deputy HHS Secretary (and acting CDC Director) Jim O’Neill.

Obama’s institutionalization of behavioral science laid the foundation for a state‑tech fusion of psychological governance, where governments and digital platforms jointly manage perception and behavior at scale. The transition from “helping people make better choices” to engineering consent through algorithms marked a historical turning point: the rise of soft, data‑driven control disguised as public service. This framework, which is efficient, adaptive, and largely invisible, has redefined democratic communication, replacing debate and informed consent with predictive modeling and behavioral optimization.

After 2015, this framework matured into full behavioral governance, culminating during the COVID‑19 crisis when federal bodies such as HHS, the CDC, and CISA combined forces with Big‑Tech firms including Google/YouTube (Jigsaw), Meta, Twitter/X, contractors like MITRE, marketing giants such as Publicis Health and the Ad Council, and academic consortia at Stanford, Johns Hopkins, and the University of Washington. Together, they refined real‑time behavioral data, micro‑targeted messaging, and algorithmic content ranking to shape public opinion and compliance.

Conclusion

The rise of nudge technology signals a deeper evolution in the governance strategies of Western “democracies” toward soft control through engineered perception. By embedding behavioral cues into digital systems and policy frameworks, modern institutions increasingly govern not through law, reason, or debate, but through subconscious influence and algorithmic conditioning.

This shift erodes informed consent, weakens autonomy, and blurs the line between governance and manipulation. In a society ruled by behavioral engineering, the real challenge is not misinformation but quietly manufactured belief, where citizens mistake programmed emotion for conviction and state‑crafted reflex for independent thought.

Frankly, I saw this coming years ago during an interview on GB News, in which I encountered a UK government representative’s reasoning that, once elected, the UK government has the right to govern its citizens in this way. And it makes me mad as hell to see this being routinely implemented here in the USA, including by the current version of the CDC, at which I work as an unpaid “Special Government Employee”.

Consider this:

Is the alternative media on vaccine safety having an effect? Or is this just more propaganda?

Or is it all about the propaganda?

Queries suggest that Moderna’s recent vaccine trials have failed specific endpoints.

You decide…
